Background

I’ve been helping a family member figure out why their computer keeps crashing off and on for several months. The machine was just over 3 years old when it started having issues. It’s a Dell Studio XPS 8000 that otherwise has been rock solid until this point. During a previous visit I replaced the hard drive and upgraded the OS from Windows Vista x64 to Windows 7 x64 via a clean install. The machine case has been cleaned inside and outside periodically, is relatively free of dust, and has adequate ventilation. None of the case fans or CPU fans show signs of failure. The video card fan was pretty dusty and sometimes squeaked, but seemed to settle down after a little bit of canned air.

When the crashes first started in October 2012, I went through the usual deal. I checked the Event Log to see if anything jumped out as the culprit in the logs, and nothing did. In fact there was neither a BugCheck (Event ID 1001) entry nor a minidump, and the Kernel-Power (Event ID 41) entry had 0 for all its bugcheck info. I determined what the most recently installed program that had a driver was and uninstalled it, with no effect. I then updated all the drivers on the machine, updated the BIOS, and used Secunia’s PSI to make sure the OS and all its applications were fully up to date.

The crashes continued to happen periodically – sometimes multiple times a day, sometimes not for several days. To up the difficulty level, the machine is located thousands of miles away on a rather slow DSL line.

Deep Dive: Level 1

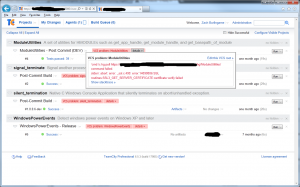

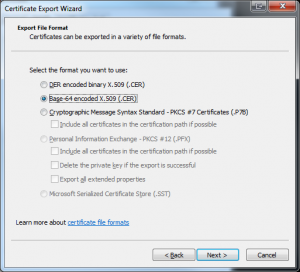

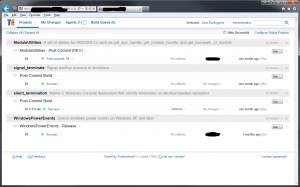

Since I didn’t have physical access to the machine, I was going to have to be resourceful to get the information I needed to start debugging this. The first thing I tried was using Fog Creek’s Copilot to remote to the machine and poke around. I’ve generally found Copilot to work very well for me when I’m providing remote assistance. However, triaging a machine over a 768 Kbps DSL line with latency north of 300 ms ultimately proved painful. Certain operations like scrolling through the Event Viewer logs are just brutal to screen-diffing algorithms. I switched tactics and had them install Microsoft’s Sysinternals Suite, run Autoruns and Process Explorer, and export both of their results for me. I also had them export the Application and System logs from Event Viewer so that I could view them on my local machine. Unfortunately, almost all of the crash dumps had already been auto-submitted to Microsoft and purged from the file system. The one crash dump that was still around was a mini-dump, so I went ahead and enabled kernel memory dumps so that any future crash would be captured with more (hopefully useful) information.

My initial inspection of the single mini-dump didn’t yield any obvious answer (more on that in Part 2), so I started looking at the event log. There had been 36 Kernel-Power (Event ID 41) events in a two-month period (October 9th – December 9th 2012). If you view the Details tab of the event properties, you can see the bugcheck code and parameters. The vast majority of the 36 events were BugCheckCode 0 with a PowerButtonTimestamp of 0. This indicates either a hard hang of the machine or a power outage. (Had the machine been powered off by using the power button, you would generally get a PowerButtonTimestamp value.) The 8 events that did have BugCheckCode entries had several different stop conditions, as shown below. NOTE: Rather annoyingly, Event ID 41 displays the stop code in decimal, not hex. I’ve put the hex value in [] next to the decimal value for clarity.

1x Bugcheck 80 [0x50] (0xfffff80022fb4fe0, 0x0, 0xfffff80002e5586b, 0x2)

1x Bugcheck 59 [0x3B] (0xc000001d, 0xfffff9600007a7e0, 0xfffff8800716df50, 0x0)

1x Bugcheck 194 [0xC2] (0x7, 0x109b, 0x4323440, 0xfffffa8003649c70)

2x Bugcheck 209 [0xD1] (0x0, 0x2, 0x8, 0x0)

1x Bugcheck 30 [0x1E] (0xffffffffc000001d, 0xfffff80002f00c29, 0x0, 0xfffffa8005476c00)

1x Bugcheck 80 [0x50] (0xfffff12014be480c, 0x1, 0xfffff8800121899d, 0x7)

1x Bugcheck 59 [0x3B] (0xc0000005, 0xfffff800031842b1, 0xfffff88007a64ef0, 0x0)
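The decimal-to-hex conversion for the Event ID 41 stop codes is easy to script. Here is a minimal Python sketch, assuming the decimal values are copied by hand from the Details tab; the function name `to_stop_code` is made up for illustration:

```python
# Stop codes as logged by Kernel-Power (Event ID 41), in decimal.
event_41_codes = [80, 59, 194, 209, 30]


def to_stop_code(decimal_code: int) -> str:
    """Format a decimal BugcheckCode as the zero-padded hex used by Event ID 1001."""
    return f"0x{decimal_code:08X}"


for code in event_41_codes:
    print(f"{code:>3} -> {to_stop_code(code)}")
```

Formatting the result as eight hex digits makes it easy to line up against the Event ID 1001 entries, which report the same stop codes in hex.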

There were also 8 BugCheck (Event ID 1001) events in that time frame:

11/05/2012 3:21:23 PM The bugcheck was: 0x00000050 (0xfffff80022fb4fe0, 0x0000000000000000, 0xfffff80002e5586b, 0x0000000000000002).

11/16/2012 10:16:35 AM The bugcheck was: 0x0000003b (0x00000000c000001d, 0xfffff9600007a7e0, 0xfffff8800716df50, 0x0000000000000000).

11/18/2012 4:39:51 PM The bugcheck was: 0x000000c2 (0x0000000000000007, 0x000000000000109b, 0x0000000004323440, 0xfffffa8003649c70).

11/18/2012 4:41:38 PM The bugcheck was: 0x000000d1 (0x0000000000000000, 0x0000000000000002, 0x0000000000000008, 0x0000000000000000).

11/30/2012 12:09:51 AM The bugcheck was: 0x000000d1 (0x0000000000000000, 0x0000000000000002, 0x0000000000000008, 0x0000000000000000).

12/02/2012 3:47:26 PM The bugcheck was: 0x0000001e (0xffffffffc000001d, 0xfffff80002f00c29, 0x0000000000000000, 0xfffffa8005476c00).

12/06/2012 1:33:05 PM The bugcheck was: 0x00000050 (0xfffff12014be480c, 0x0000000000000001, 0xfffff8800121899d, 0x0000000000000007).

12/09/2012 5:17:29 PM The bugcheck was: 0x0000003b (0x00000000c0000005, 0xfffff800031842b1, 0xfffff88007a64ef0, 0x0000000000000000).

Nothing initially jumped out at me because the stop codes varied and I didn’t have any crash dumps to correlate them with. I started working through the stop code documentation for each event to see if there was some commonality.

Stop Code 0xC2

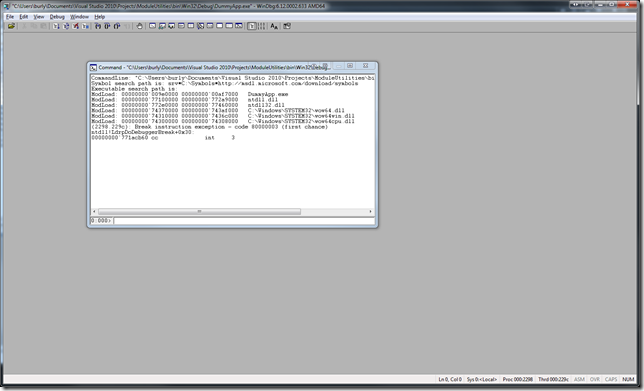

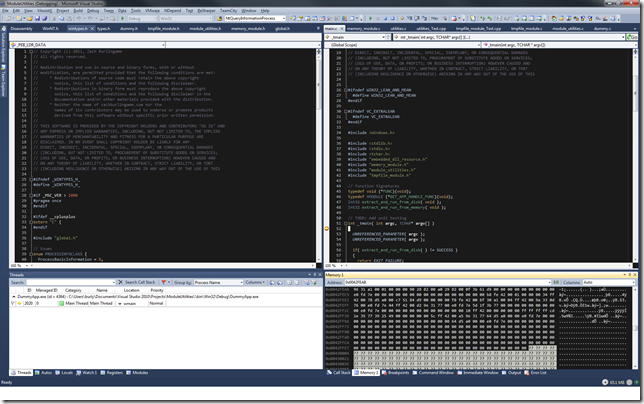

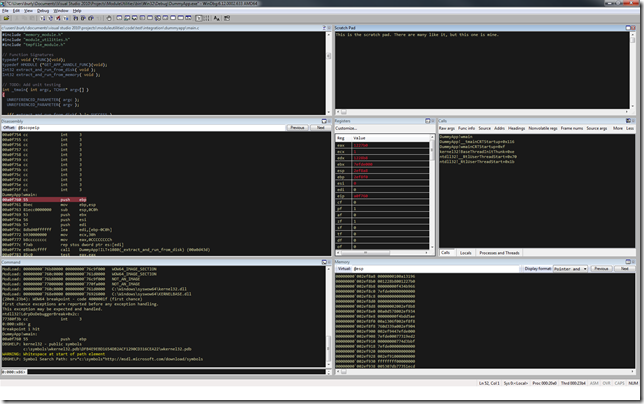

I could see from the timestamps that the two events with stop code 0xC2 (one Event ID 41 and one Event ID 1001) referred to the same crash. I referred to the MSDN documentation and the bugcheck parameters to see what type of violation had occurred. The answer is a double free: “The current thread attempted to free the pool which was already freed”. The address it was trying to double free was 0xfffffa8003649c70 and its pool header contents were 0x0000000004323440. Using a kernel debugging session in WinDbg on a Windows 7 x64 target machine, we can see what a pool header looks like:

1: kd> dt nt!_POOL_HEADER -v

struct _POOL_HEADER, 9 elements, 0x10 bytes

+0x000 PreviousSize : Bitfield Pos 0, 8 Bits

+0x000 PoolIndex : Bitfield Pos 8, 8 Bits

+0x000 BlockSize : Bitfield Pos 16, 8 Bits

+0x000 PoolType : Bitfield Pos 24, 8 Bits

+0x000 Ulong1 : Uint4B

+0x004 PoolTag : Uint4B

+0x008 ProcessBilled : Ptr64 to struct _EPROCESS, 138 elements, 0x4d0 bytes

+0x008 AllocatorBackTraceIndex : Uint2B

+0x00a PoolTagHash : Uint2B

Therefore, taking the pool header as a quadword (0x0000000004323440), we get its sequence of bytes in memory as (in hex): 40 34 32 04 00 00 00 00. Placing these into the _POOL_HEADER fields, we get:

PreviousSize: 0x40

PoolIndex: 0x34

BlockSize: 0x32 (size of allocation is thus 0x320 or 0n800)

PoolType: 0x4 (session pool)

PoolTag: 0x00000000

I was hoping for a PoolTag value there to help point me in the correct direction, but alas, dead end. I also tried searching the session pool for allocations of size 0x320 on one of my Windows 7 x64 VMs but didn’t turn up anything interesting. What we do know is that the double free can be caused by either a faulty driver or memory corruption and thus our problem could be either.
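The field extraction above can be double-checked with a few shifts and masks. This is a minimal Python sketch, assuming the Windows 7 x64 `_POOL_HEADER` layout shown in the `dt` output and a 16-byte unit for `BlockSize` (which is what turns 0x32 into 0x320); the function name and the `SizeInBytes` key are made up for illustration:

```python
def decode_pool_header(first_qword: int) -> dict:
    """Split the first 8 bytes of a _POOL_HEADER into its fields."""
    ulong1 = first_qword & 0xFFFFFFFF             # +0x000 Ulong1 (four 8-bit bitfields)
    pool_tag = (first_qword >> 32) & 0xFFFFFFFF   # +0x004 PoolTag
    block_size = (ulong1 >> 16) & 0xFF
    return {
        "PreviousSize": ulong1 & 0xFF,
        "PoolIndex": (ulong1 >> 8) & 0xFF,
        "BlockSize": block_size,
        "PoolType": (ulong1 >> 24) & 0xFF,
        "PoolTag": pool_tag,
        # Assumption: BlockSize counts 16-byte units on x64.
        "SizeInBytes": block_size * 0x10,
    }


header = decode_pool_header(0x0000000004323440)
for name, value in header.items():
    print(f"{name}: {value:#x}")
```

Only the first 8 bytes of the 16-byte header come from the bugcheck parameter, so `ProcessBilled` and the fields that overlay it are not recoverable this way.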

Stop Code 0xD1

I turned my attention next to the 0xD1 stop code. The MSDN documentation states that this occurs when a page fault occurred at an IRQL too high (>= DISPATCH_LEVEL). Parameter 1 is the memory referenced, Parameter 2 is the IRQL at the time of the reference, Parameter 3 is the access type (read, write, or execute) and Parameter 4 is the address that referenced the memory. Applying that to these bugchecks we see:

0x000000d1 (0x0000000000000000, 0x0000000000000002, 0x0000000000000008, 0x0000000000000000)

Memory Referenced: 0x0 (NULL)

Current IRQL: 0x2 (DISPATCH_LEVEL)

Operation: 0x8 Execute

Faulting Address: 0x0

At first glance it looks like we have a NULL pointer dereference in a function at the NULL page, which doesn’t make sense. Referring back to the MSDN page though we see:

If the first parameter has the same value as the fourth parameter, and the third parameter indicates an execute operation, this bug check was likely caused by a driver that was trying to execute code when the code itself was paged out. Possible causes for the page fault include the following:

- The function was marked as pageable and was running at an elevated IRQL (which includes obtaining a lock).

- The function call was made to a function in another driver, and that driver was unloaded.

- The function was called by using a function pointer that was an invalid pointer.

This seems to indicate that a faulty driver is the most likely cause of this bugcheck, although it’s plausible that memory corruption led to this error as well.
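The parameter reading for 0xD1 can be written down as a small helper. This is a minimal Python sketch, assuming only the access-type values that appear in this post (0x0 read, 0x1 write, 0x8 execute); the function and key names are made up for illustration, and the last field simply encodes the MSDN rule quoted above:

```python
# Access-type values as they appear in the bugchecks discussed in this post.
ACCESS_TYPES = {0x0: "Read", 0x1: "Write", 0x8: "Execute"}


def describe_d1(referenced: int, irql: int, access: int, faulting_address: int) -> dict:
    """Label the four parameters of a 0xD1 bugcheck."""
    is_execute = ACCESS_TYPES.get(access) == "Execute"
    return {
        "memory_referenced": hex(referenced),
        "irql": irql,
        "operation": ACCESS_TYPES.get(access, "Unknown"),
        "faulting_address": hex(faulting_address),
        # MSDN rule: parameter 1 == parameter 4 and the access was an execute.
        "tried_to_execute_unavailable_code": is_execute and referenced == faulting_address,
    }


info = describe_d1(0x0, 0x2, 0x8, 0x0)
print(info)
```

For both 0xD1 crashes here the last field comes out true, which matches the "invalid function pointer" case rather than an ordinary data access through a NULL pointer.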

Stop Code 0x3B

Moving on to the 0x3B stop code, I again refer to the MSDN documentation. Applying this info along with the documentation on NTSTATUS values to the 0x3B bugcheck here we have:

0x0000003b (0x00000000c000001d, 0xfffff9600007a7e0, 0xfffff8800716df50, 0x0000000000000000)

Exception: 0xC000001D (STATUS_ILLEGAL_INSTRUCTION) – {EXCEPTION} Illegal Instruction An attempt was made to execute an illegal instruction.

Faulting Address: 0xfffff9600007a7e0

Exception Context Record (CXR): 0xfffff8800716df50

Since I don’t have a crash dump for this one, I can’t be sure. However, in my experience session-specific drivers like win32k.sys and cdd.dll are generally loaded into the 0xfffff960`00000000 address range (vs. the 0xfffff880`00000000 and 0xfffff800`00000000 ranges that system-wide drivers are loaded into). I haven’t found any documentation on this behavior. Assuming that the address that caused this exception was in a Microsoft driver like win32k.sys or cdd.dll, it’s very unlikely that there is an illegal instruction bug in the code. Rather, this is more likely corruption of the code in memory caused by a bad driver, a bad device using DMA, or bad memory.

Stop Code 0x1E

Next up was the 0x1E stop code. Glancing at its documentation we get:

0x0000001e (0xffffffffc000001d, 0xfffff80002f00c29, 0x0000000000000000, 0xfffffa8005476c00)

Exception Code: 0xC000001D (STATUS_ILLEGAL_INSTRUCTION) – {EXCEPTION} Illegal Instruction An attempt was made to execute an illegal instruction.

Exception Address: 0xfffff80002f00c29

Parameter 0 of Exception: 0x0

Parameter 1 of Exception: 0xfffffa8005476c00

So a few things here. First of all, I believe that the first parameter translates to STATUS_ILLEGAL_INSTRUCTION because NTSTATUS values are 32 bits wide even on 64-bit architectures, so since 0xC000001D is negative when read as a signed 32-bit value, it’s being sign-extended to 0xffffffffc000001d. Second is that in my experience, modules like nt and hal get loaded in the 0xfffff800`00000000 range. Again, it seems unlikely that nt or hal would have an illegal instruction bug in them. The MSDN documentation for 0x1E speaks quite a bit about hardware issues and faulty device drivers and recommends you run a memory test. It also mentions a BIOS issue. However, back in October when I was first triaging this issue, I updated the BIOS and the release notes didn’t mention any issues that sounded related to this. Since the crashes occurred both before and after the upgrade, it’s unlikely that the BIOS is the cause here.
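The sign-extension guess is easy to sanity-check. Here is a minimal Python sketch of extending a 32-bit value to 64 bits and of recovering the 32-bit NTSTATUS from the logged parameter; both function names are made up for illustration:

```python
def sign_extend_32_to_64(value: int) -> int:
    """Sign-extend a 32-bit value to 64 bits, returned as an unsigned integer."""
    value &= 0xFFFFFFFF
    if value & 0x80000000:           # top bit set: negative as a signed 32-bit value
        value |= 0xFFFFFFFF00000000  # fill the upper 32 bits with ones
    return value


def ntstatus_from_param(param: int) -> int:
    """Recover the 32-bit NTSTATUS from a 64-bit bugcheck parameter."""
    return param & 0xFFFFFFFF


print(hex(sign_extend_32_to_64(0xC000001D)))         # 0xffffffffc000001d
print(hex(ntstatus_from_param(0xFFFFFFFFC000001D)))  # 0xc000001d
```

This also explains why the 0x3B bugcheck shows the same status as 0x00000000c000001d: there the value appears zero-extended rather than sign-extended, but the low 32 bits are identical.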

Stop Code 0x50

The final stop code in the list is 0x50. Taking a gander at the MSDN page we again see discussion about faulty hardware:

Bug check 0x50 usually occurs after the installation of faulty hardware or in the event of failure of installed hardware (usually related to defective RAM, be it main memory, L2 RAM cache, or video RAM).

Another common cause is the installation of a faulty system service.

Antivirus software can also trigger this error, as can a corrupted NTFS volume.

For completeness’ sake, the particular 0x50 bugchecks we had were:

0x00000050 (0xfffff80022fb4fe0, 0x0000000000000000, 0xfffff80002e5586b, 0x0000000000000002)

Memory Referenced: 0xfffff80022fb4fe0

Operation: 0x0 (Read)

Faulting Address: 0xfffff80002e5586b (likely nt or hal module)

0x00000050 (0xfffff12014be480c, 0x0000000000000001, 0xfffff8800121899d, 0x0000000000000007)

Memory Referenced: 0xfffff12014be480c

Operation: 0x1 (Write)

Faulting Address: 0xfffff8800121899d (unknown)

That 0xfffff12014be480c looks suspicious to me, because in my experience, I don’t see many memory allocations in the 0xfffff120`00000000 range.
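The address-range rules of thumb used throughout this post can be collected into one lookup. This is a deliberately coarse Python sketch: the ranges are only my own observations (not documented behavior), keying on the upper 32 bits is an assumption, and the names are made up for illustration:

```python
# Upper 32 bits of a kernel address -> what I have usually seen loaded there.
# These are observations from this post, not documented guarantees.
OBSERVED_RANGES = {
    0xFFFFF800: "nt / hal and other system-wide modules",
    0xFFFFF880: "system-wide drivers",
    0xFFFFF960: "session drivers such as win32k.sys",
}


def classify(address: int) -> str:
    """Return the observed range an address falls into, if any."""
    return OBSERVED_RANGES.get(address >> 32, "not in any range noted in this post")


for addr in (0xFFFFF80002E5586B, 0xFFFFF8800121899D,
             0xFFFFF9600007A7E0, 0xFFFFF12014BE480C):
    print(f"{addr:#018x}: {classify(addr)}")
```

The last address falls through to the default, which is the same "this looks odd" signal described above; it does not prove corruption on its own.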

Where to next?

Now that I had exhausted the bugcheck information from the Windows Event Logs and still didn’t have a clear answer, where do I head next? Stay tuned for Part 2 where I start digging into the crash dumps in more detail.